Do Duplicate Files Slow Down Your Mac?

Is your Mac running slower than it used to? You've checked Activity Monitor, closed unnecessary apps, and even restarted your system, but the sluggish performance persists. While many Mac users focus on RAM usage or CPU load, there's a factor that sometimes goes unnoticed—and is often misunderstood: duplicate files.

Duplicate files primarily waste storage space, but they can also contribute to performance issues in specific scenarios—particularly when combined with other factors like low storage space or very large file counts. This guide explores when and how duplicates impact performance, with accurate technical details and practical solutions.

When Duplicate Files Impact Performance

Understanding the performance impact of duplicates requires accurate technical context. Modern macOS (using the APFS file system) handles duplicates more efficiently than older systems, but duplicates can still contribute to performance issues in specific scenarios.

Indexing Impact: Spotlight's Actual Behavior

macOS uses Spotlight to index files on your system, creating a searchable database. However, the impact of duplicates on Spotlight is more nuanced than it might initially appear.

Important technical context: APFS (Apple File System) supports file cloning with copy-on-write. When you duplicate a file within the same volume, for example with Finder's Duplicate command, APFS can create a clone that shares the original's content blocks until one of the copies is modified. APFS does not scan for identical content after the fact, so copies that arrive independently (a file downloaded twice, or copied in from another drive) each take up their own space. Additionally, Spotlight uses attribute caches and FSEvents to track file changes efficiently.

This means:

- Metadata Still Indexed: Even when APFS clones share content blocks, Spotlight still indexes the metadata (names, paths, dates, attributes) of each duplicate file separately. Duplicates therefore increase the total number of entries in the Spotlight index and add clutter to search results.

- Content Extraction Impact: For files where Spotlight extracts content (like documents and images), each duplicate file path is processed separately. However, the actual content extraction may benefit from cached results or file system optimizations when the copies share blocks.

- Search Result Clutter: The most noticeable impact for users is search result clutter—Spotlight may return multiple copies of the same content in results, making it harder to find the specific file you need.

- Realistic Impact: The performance impact is typically most noticeable when you have very large numbers of duplicate files (tens or hundreds of thousands), combined with other factors like low storage space or older hardware. Modern macOS handles millions of files efficiently, so the impact is usually modest unless you have an extreme number of duplicates.

💡 Technical Note: While APFS uses file cloning to share content blocks when files are duplicated, this happens at the file system level and doesn't eliminate the duplicate file entries in directory structures or metadata indexes. Each duplicate file still exists as a separate file entry, which affects navigation, search results, and user workflow—even if the underlying storage is optimized.
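To make the "separate file entry" point concrete, here is a minimal Python sketch. It copies a file and then checks two things: whether the bytes are identical, and whether the two paths are the same directory entry (same device and inode number). The file names are made up for illustration, and whether the copy ends up as an APFS clone on your Mac is not something this script can tell you; the point is only that a copy is always its own entry.

```python
import os
import shutil
import tempfile


def describe_copies(original, duplicate):
    """Compare two paths: identical bytes? same underlying file entry?"""
    a, b = os.stat(original), os.stat(duplicate)
    with open(original, "rb") as f1, open(duplicate, "rb") as f2:
        same_content = f1.read() == f2.read()
    return {
        "same_content": same_content,
        # Two paths are the same file entry only if device and inode match.
        "same_inode": (a.st_dev, a.st_ino) == (b.st_dev, b.st_ino),
        # What Finder-style size totals add up to, regardless of block sharing.
        "logical_size_total": a.st_size + b.st_size,
    }


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "report.txt")        # hypothetical file names
    dst = os.path.join(tmp, "report copy.txt")
    with open(src, "wb") as f:
        f.write(b"quarterly numbers\n" * 1000)
    shutil.copyfile(src, dst)
    info = describe_copies(src, dst)
    print(info)
```

The copy has the same content but a different inode, which is why Spotlight, Finder, and backup tools each treat it as one more file to track.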

Backup Performance: Time Machine's Actual Behavior

Time Machine is macOS's built-in backup solution that automatically backs up your files. However, the impact of duplicates on Time Machine is more limited than it might seem.

Important technical context: Time Machine uses block-level deduplication and hard links within backup snapshots. When duplicate files contain identical content blocks, Time Machine doesn't store those blocks multiple times in the backup. This means the storage impact of duplicates in backups is significantly reduced compared to what you might expect.

However, duplicates can still have some impact:

- Scanning Overhead: Time Machine still scans and catalogs every file, including duplicates, during backup operations. This scanning process can take longer when many duplicate files are present, potentially extending backup time, especially on the first backup or after major file system changes.

- Metadata Tracking: Even though storage is optimized through deduplication, Time Machine still tracks metadata for each duplicate file separately, which can increase the overhead of managing backup snapshots.

- Limited Storage Impact: The actual storage impact on your backup drive is much less than the total size of duplicate files would suggest, because Time Machine deduplicates identical content blocks. However, if you have many duplicates, the scanning and cataloging process can still be slower.

- Restore Complexity: When restoring from Time Machine, duplicate files may complicate the process slightly, as you'll need to choose which copy to restore if multiple identical files existed.

💡 Technical Note: Time Machine's deduplication occurs within each backup snapshot using hard links for identical files. This means you don't pay the full storage cost for duplicate content in backups, but the backup process still needs to scan and catalog all files, which can extend backup times when many duplicates are present.

Finder Performance: Navigation and File Management

The Finder application is responsible for displaying and managing your files. Modern macOS is highly optimized to handle very large file counts efficiently, so the impact of duplicates on Finder is typically minimal unless you have an extreme number of duplicates in a single folder or pathological folder structures.

Important context: Finder slowdowns are usually caused by pathological folder structures (like having hundreds of thousands of files in a single folder) rather than simply having duplicates scattered across your system. macOS handles millions of files efficiently when they're organized in a reasonable directory structure.

When duplicates can impact Finder:

- Folder-Specific Issues: If you have a folder containing many duplicate files (thousands or tens of thousands), Finder may take longer to render the folder contents, especially in icon or gallery views. This is more about the total number of files in that specific folder than about duplicates as such.

- Search Result Clutter: When using Finder's search feature, duplicate files can clutter results, making it harder to find the specific file you need. This is more of a usability issue than a performance problem.

- Memory Usage (Marginal): Finder maintains file metadata and thumbnails in memory for active folders. While duplicate files do increase this slightly, the impact is usually negligible on modern Macs with adequate RAM. This becomes more noticeable only on systems with very limited RAM and many active folders with large numbers of duplicates.
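Since Finder lag depends mostly on how many items sit directly inside one folder, it can help to find your most crowded folders before worrying about duplicates. The sketch below is one way to do that in Python; `busiest_folders` is a helper name invented for this example, and the demo runs on a throwaway directory rather than your real files.

```python
import os
import tempfile


def busiest_folders(root, top=5):
    """Return up to `top` (folder, direct_file_count) pairs, busiest first."""
    counts = []
    for dirpath, _dirnames, filenames in os.walk(root):
        # Count only files directly inside this folder, not in subfolders.
        counts.append((dirpath, len(filenames)))
    counts.sort(key=lambda pair: pair[1], reverse=True)
    return counts[:top]


# Small self-contained demo on a temporary directory tree.
with tempfile.TemporaryDirectory() as demo_root:
    crowded = os.path.join(demo_root, "Downloads")
    tidy = os.path.join(demo_root, "Documents")
    os.makedirs(crowded)
    os.makedirs(tidy)
    for i in range(50):
        open(os.path.join(crowded, f"invoice {i}.pdf"), "w").close()
    open(os.path.join(tidy, "notes.txt"), "w").close()

    for folder, count in busiest_folders(demo_root, top=2):
        print(f"{count:>6}  {os.path.relpath(folder, demo_root)}")
```

To try it on real data, pass a folder such as `os.path.expanduser("~/Downloads")` as `root`. Walking a very large tree can take a while.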

Application Impact: When Duplicates Matter

Some applications scan directories or index files when they launch. Photo libraries, media players, document managers, and development tools may perform file system scans that can be impacted by duplicate files, though the impact varies significantly by application.

Realistic impact: Most modern applications handle large file counts efficiently. The impact of duplicates is typically noticeable only when:

- Application-Specific Scans: Applications like Photos, iTunes/Music, or development IDEs that scan directories during startup may take longer to launch if they encounter many duplicate files in their target directories. However, most applications only scan specific directories (like your Photos library or project folders), so duplicates outside those directories won't affect launch time.

- Internal Indexing: Apps that build internal indexes or catalogs (like photo management software) may take longer to index when they encounter duplicate files. This is most noticeable during the initial index build or after major file system changes.

- Cache Usage (Minor): Applications that cache file metadata, thumbnails, or previews may store redundant cache data for duplicates. This consumes storage and memory, but the impact is usually modest unless you have an extreme number of duplicates in directories that the app actively monitors.

Important note: The impact on application performance is usually most noticeable when duplicates are present in directories that specific applications actively scan or monitor. Duplicates elsewhere on your system typically have minimal impact on app performance.

Other Considerations: When Duplicates Matter Most

While the performance impact of duplicates is often overstated, there are legitimate reasons to manage duplicate files, particularly related to storage and workflow efficiency.

- Disk Space Consumption: This is the primary and most legitimate concern. Duplicate files consume storage space, which can be a significant issue on systems with limited storage. When free space runs low, macOS has less room for virtual memory swap files, caches, and temporary files, and that can slow the whole system down. Apple recommends maintaining adequate free space for optimal performance. Storage impact is the most tangible and measurable effect of duplicates.

- Backup Storage (Limited Impact): While Time Machine uses deduplication, cloud storage and sync services (iCloud, Dropbox, Google Drive) and other backup solutions may not deduplicate on your behalf, so duplicates can count against your quota and increase storage costs. This varies significantly by service.

- Search Result Clutter: The most noticeable user-facing impact is search result clutter. When searching for files, you may see multiple identical results, making it harder to find the specific file you need. This is more of a workflow and usability issue than a performance problem.

- Workflow Efficiency: Managing duplicate files can be time-consuming. Not knowing which version of a file is the "correct" one, or accidentally working on the wrong copy, can create workflow inefficiencies and confusion.

- System Responsiveness (When Combined with Other Factors): Systems with many duplicate files combined with other factors like very low storage space, older hardware, or extremely large file counts may become less responsive. However, duplicates alone are rarely the primary cause of system-wide slowdowns on modern Macs.

💡 Honest Assessment: The primary reason to remove duplicate files is storage space recovery and workflow efficiency (avoiding confusion about which file is the "right" one). The direct performance impact on modern macOS is typically modest unless you have an extreme number of duplicates or they're combined with other system issues like very low storage space.

Identifying If Duplicates Are Contributing to Issues

Most system slowdowns are not primarily caused by duplicate files. However, there are specific signs that duplicates might be contributing to performance issues, especially when combined with other factors. Here's how to identify if duplicates might be a factor:

Signs That Duplicates Might Be Contributing to Issues

Important: These symptoms can have many causes. Duplicates are rarely the primary culprit, but they can contribute when combined with other factors:

- Search Result Clutter: When searching for files, Spotlight returns multiple identical results, making it hard to find the specific file you need. This is a clear indicator of duplicates, though it's more of a usability issue than a performance problem.

- Full Disk Space Warnings: You receive frequent "Your disk is almost full" warnings. While duplicates can contribute to this, low storage space is often caused by many factors. Checking for duplicates is one way to recover space, but it may not be the only solution.

- Extended Time Machine Backups: Backups take noticeably longer, especially if you've recently added many files. While duplicates can contribute to longer scan times, other factors like file size, network speed (for network backups), or drive speed are often more significant.

- Finder Lag in Specific Folders: Opening specific folders results in noticeable delays. This is more likely caused by having many files in a single folder (whether duplicates or not) rather than duplicates scattered across your system.

- High Storage Usage Without Clear Explanation: Your storage usage is high but you can't identify where the space is being used. Duplicates can contribute to this, and a duplicate finder can help identify if duplicates are consuming significant space.

How to Check for Duplicates (Quick Method)

While a comprehensive duplicate scan requires a dedicated tool, you can perform a quick check using Finder:

- Open Finder and navigate to a folder you suspect might contain duplicates (like Downloads, Documents, or Desktop).

- Use the View menu to sort files by name or size.

- Look for files with identical or very similar names, especially those with numbers appended (like "document.pdf", "document 1.pdf", "document 2.pdf").

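- Check file sizes: exact duplicates always have identical sizes, so a size match is a useful first filter. Two different files can also happen to share a size, so confirm by opening or previewing them before deleting anything.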
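The manual check above can be approximated with a few lines of Python. This sketch groups the files in one folder by exact byte size and reports only the sizes shared by more than one file. It assumes a flat folder (no recursion), and `same_size_groups` is a name chosen for this example. A shared size marks a candidate, not a confirmed duplicate.

```python
import os
import tempfile
from collections import defaultdict


def same_size_groups(folder):
    """Map file size -> sorted paths, for sizes shared by 2+ files in `folder`."""
    by_size = defaultdict(list)
    for entry in os.scandir(folder):
        # Skip subfolders and symlinks; only regular files are compared.
        if entry.is_file(follow_symlinks=False):
            size = entry.stat(follow_symlinks=False).st_size
            by_size[size].append(entry.path)
    return {size: sorted(paths) for size, paths in by_size.items() if len(paths) > 1}


# Demo on a temporary folder with two same-sized files and one different one.
with tempfile.TemporaryDirectory() as demo:
    for name, data in [("document.pdf", b"12345"),
                       ("document 1.pdf", b"12345"),
                       ("photo.jpg", b"a much longer file")]:
        with open(os.path.join(demo, name), "wb") as f:
            f.write(data)
    candidates = {
        size: [os.path.basename(p) for p in paths]
        for size, paths in same_size_groups(demo).items()
    }
    print(candidates)
```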

For a comprehensive scan across your entire system, use a dedicated duplicate finder tool that can perform content-based comparison to identify all duplicates efficiently, regardless of filename or location.

How to Manage Duplicate Files

If you've identified that duplicates are consuming significant storage space or causing workflow issues, here are steps to manage them effectively:

Step 1: Find Duplicates Using a Dedicated Tool

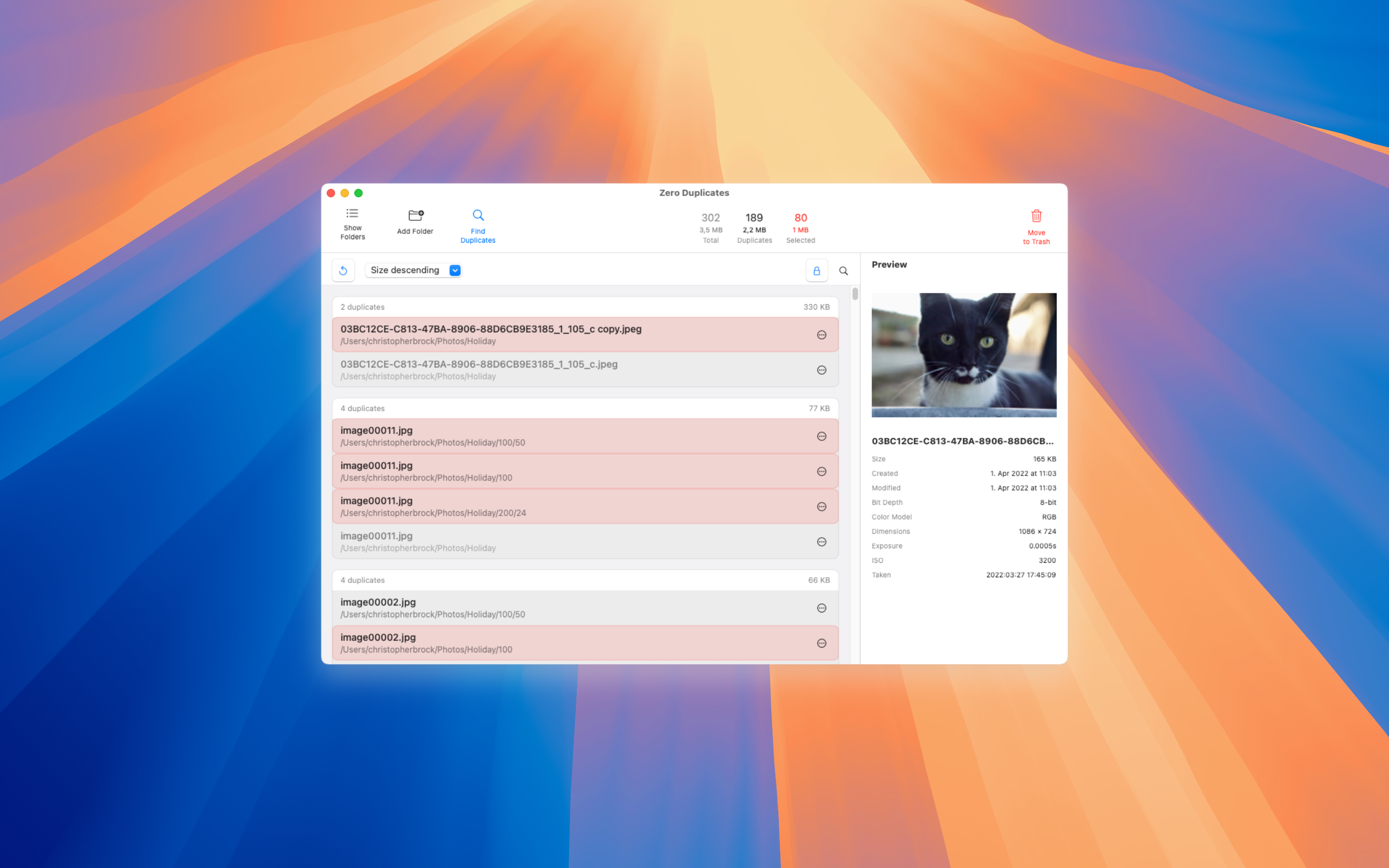

To efficiently identify duplicate files across your system, you'll need a tool that can perform content-based comparison (not just filename matching). Tools like Zero Duplicates are designed for this purpose:

- Content-Based Detection: A good duplicate finder uses content-based detection (comparing file hashes or content) rather than just filenames, which means it can find duplicates even when files have different names or locations.

- Focus on Large Files: If your primary goal is storage recovery, prioritize removing larger duplicate files first, as they have the most significant impact on storage space.

- Preview Before Deleting: Always preview files before deletion to verify contents and ensure you're keeping the correct version. This is especially important for documents or files that might have been modified at different times.
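For readers curious how content-based detection works in general, here is a minimal Python sketch of the common approach: group files by size first (cheap), then hash only the files that share a size (expensive), and report the groups whose hashes match. This is a generic illustration, not a description of how Zero Duplicates or any other product is implemented. The function names and the choice of SHA-256 are assumptions made for this example.

```python
import hashlib
import os
import tempfile
from collections import defaultdict


def file_digest(path, chunk_size=1 << 20):
    """SHA-256 of a file, read in chunks so large files don't fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_duplicates(root):
    """Return [(size, [paths...])] for identical files, largest savings first."""
    by_size = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # a symlink is a pointer, not a second copy
            try:
                by_size[os.path.getsize(path)].append(path)
            except OSError:
                continue  # unreadable or vanished file: skip it

    groups = []
    for size, paths in by_size.items():
        if size == 0 or len(paths) < 2:
            continue  # empty files and unique sizes cannot be useful matches
        by_hash = defaultdict(list)
        for path in paths:
            try:
                by_hash[file_digest(path)].append(path)
            except OSError:
                continue
        groups.extend((size, sorted(g)) for g in by_hash.values() if len(g) > 1)

    # Put the groups that would free the most space at the top.
    groups.sort(key=lambda g: g[0] * (len(g[1]) - 1), reverse=True)
    return groups


# Demo: same bytes under different names and folders are still matched.
with tempfile.TemporaryDirectory() as demo_root:
    os.makedirs(os.path.join(demo_root, "Desktop"))
    os.makedirs(os.path.join(demo_root, "Downloads"))
    payload = b"holiday photo bytes" * 1000
    for rel in ("Desktop/beach.jpg", "Downloads/IMG_0042.jpg"):
        with open(os.path.join(demo_root, rel), "wb") as f:
            f.write(payload)
    with open(os.path.join(demo_root, "Desktop/notes.txt"), "wb") as f:
        f.write(b"unique")
    demo_groups = find_duplicates(demo_root)
    for size, paths in demo_groups:
        print(size, [os.path.relpath(p, demo_root) for p in paths])
```

The script only reports matches. It never deletes anything, which leaves the preview-and-verify step to you.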

Step 2: Remove Duplicates Safely

Safe duplicate removal requires careful attention to ensure you don't accidentally delete important files:

- Keep Originals, Delete Duplicates: Always verify that at least one copy of each file is preserved. Good duplicate finders have safeguards to prevent deleting all copies, but you should always double-check important files.

- Verify Important Files: Before batch deletion, review critical documents, photos, or project files to ensure you're keeping the correct versions. Pay special attention to files that might have been modified at different times—the "newest" file isn't always the one you want to keep.

- Use Preview Feature: Take advantage of preview functionality to examine file contents before deletion, especially for documents or media files where the "original" might not be obvious from the filename alone.

- Start Small: Consider starting with a specific folder or directory rather than scanning your entire system at once. This allows you to get comfortable with the process and verify results before proceeding.
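If you script any part of the cleanup yourself, one cautious pattern is to move extra copies into a holding folder instead of deleting them, and to refuse to act unless one copy is explicitly kept. The Python sketch below shows that idea. `quarantine_duplicates` and the `_duplicates_to_review` folder name are hypothetical, and the demo runs entirely inside a temporary directory.

```python
import os
import shutil
import tempfile


def _free_target(directory, name):
    """Pick a path in `directory` that does not overwrite an existing file."""
    base, ext = os.path.splitext(name)
    candidate = os.path.join(directory, name)
    counter = 1
    while os.path.exists(candidate):
        candidate = os.path.join(directory, f"{base} ({counter}){ext}")
        counter += 1
    return candidate


def quarantine_duplicates(group, quarantine_dir, keep):
    """Move every file in `group` except `keep` into `quarantine_dir`.

    Returns a list of (old_path, new_path) pairs so the move can be undone.
    """
    if keep not in group:
        raise ValueError("the file to keep must be one of the files in the group")
    os.makedirs(quarantine_dir, exist_ok=True)
    moved = []
    for path in group:
        if path == keep:
            continue  # never touch the copy we decided to keep
        target = _free_target(quarantine_dir, os.path.basename(path))
        shutil.move(path, target)
        moved.append((path, target))
    return moved


# Demo: three identical files, keep the first, park the other two.
with tempfile.TemporaryDirectory() as demo_root:
    group = []
    for name in ("report.pdf", "report 1.pdf", "report 2.pdf"):
        path = os.path.join(demo_root, name)
        with open(path, "wb") as f:
            f.write(b"same bytes")
        group.append(path)
    holding = os.path.join(demo_root, "_duplicates_to_review")
    moved = quarantine_duplicates(group, holding, keep=group[0])
    keeper_still_there = os.path.exists(group[0])
    held_names = sorted(os.listdir(holding))
    print(keeper_still_there, held_names)
```

Once you have used your Mac normally for a while and nothing is missing, the holding folder can be emptied. Until then, every move is reversible using the returned list.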

Step 3: Optimize After Cleanup

After removing duplicates, perform these optimization steps to ensure your Mac fully benefits from the cleanup:

- Rebuild Spotlight Index (if needed): If you've removed a large number of files and want to ensure Spotlight is up to date, you can rebuild the index. Open Terminal and run:

sudo mdutil -E /

Note that this is rarely necessary, because Spotlight automatically updates when files are deleted. Rebuilding the index can take considerable time and may temporarily slow down searches while it rebuilds.

- Monitor Storage Space: After removing duplicates, check your available storage space to verify the amount of space recovered. This gives you a clear measure of the impact.

- Verify System Behavior: After cleanup, use your system normally for a few days to verify that everything is working as expected and that no important files were accidentally removed.
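To measure the space recovered rather than eyeball it, you can record free space before and after the cleanup. The following Python sketch uses the standard library's `shutil.disk_usage`. The helper names are invented for this example. On APFS, volumes in the same container share free space, so the figure for `/` should also cover your data. The number may differ from what Finder shows, since Finder can count purgeable space as available.

```python
import shutil


def free_space_report(path="/"):
    """Return total and free space for the volume that holds `path`."""
    usage = shutil.disk_usage(path)
    return {
        "total_bytes": usage.total,
        "free_bytes": usage.free,
        "free_percent": 100 * usage.free / usage.total,
    }


def bytes_recovered(before, after):
    """Difference in free space between two reports (negative if space shrank)."""
    return after["free_bytes"] - before["free_bytes"]


before = free_space_report("/")
# ... remove or quarantine duplicates here, then measure again ...
after = free_space_report("/")

print(f"Free now: {after['free_bytes'] / 1e9:.1f} GB "
      f"({after['free_percent']:.0f}% of the volume)")
print(f"Change since first measurement: {bytes_recovered(before, after) / 1e9:.2f} GB")
```

Other activity on the Mac (downloads, caches, local snapshots) also changes free space, so treat the difference as an estimate.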

💡 Realistic Expectations: Removing duplicates will primarily free up storage space. The impact on system performance (if any) will be most noticeable if you had an extreme number of duplicates or were experiencing storage-related slowdowns. On modern Macs with adequate storage, you may not notice significant performance changes after removing duplicates.

Preventing Duplicate Accumulation

Once you've cleaned up duplicates, these practices can help prevent them from accumulating again:

- Regular Storage Maintenance: Set a reminder (monthly or quarterly) to check your storage usage and scan for duplicates if needed. This helps prevent gradual accumulation over time.

- Monitor Storage Usage: Keep an eye on your disk space usage through System Settings. Sudden increases in storage consumption without adding new files might indicate duplicate accumulation or other storage issues.

- Best Practices for File Organization: Develop a consistent file naming convention and organization system. Use descriptive names and avoid saving the same file multiple times with different names. Consider using version control or document management systems for important files.

- Be Careful with Cloud Sync: If you use multiple cloud storage services (iCloud, Dropbox, Google Drive), be cautious about syncing the same folders to multiple services, as this can create duplicate copies across different cloud storage locations.

- Use Save-As Carefully: When saving files, be mindful of whether you're creating a new copy or overwriting an existing file. Many applications' "Save As" dialogs default to creating new files rather than overwriting.

Other Performance Optimization Tips

Managing duplicates is one aspect of maintaining your Mac, and it works best when combined with other optimization strategies:

- Storage Optimization: Combine duplicate removal with other storage optimization techniques. Regularly delete unused files, clear caches, and manage large media files.

- Automatic Maintenance: Set up automatic trash emptying so that deleted files don't keep consuming disk space in your Trash. In Finder, open Settings (Preferences on older macOS versions), go to Advanced, and enable "Remove items from the Trash after 30 days".

- System Updates: Keep macOS and your applications updated. Apple regularly releases performance improvements and optimizations that can enhance system responsiveness.

- RAM and Storage Considerations: If performance issues persist after cleanup, consider whether your Mac has sufficient RAM or if upgrading to faster storage (like an SSD) would provide additional benefits.

What to Expect After Removing Duplicates

After removing duplicate files, here's what you can realistically expect:

- Storage Space Recovery: This is the most tangible benefit. You'll free up disk space up to the combined size of the duplicates you removed. Copies that APFS stored as clones share blocks with the original, so deleting those frees less than their listed size.

- Cleaner Search Results: Spotlight searches will return fewer duplicate results, making it easier to find the specific file you need. This is a usability improvement rather than a performance one.

- Reduced Backup Scan Times (Potential): If you removed a large number of duplicates, Time Machine backup scans may complete faster, though the impact depends on how many files were removed and other factors like drive speed.

- Improved System Responsiveness (If Storage Was Low): If your system was experiencing slowdowns due to very low storage space, removing duplicates and freeing up space may improve overall responsiveness. However, if you had adequate storage space, you may not notice significant performance changes.

- Application Launch Times (Minimal Impact): Apps that scan specific directories may launch slightly faster if you removed duplicates from those directories, but the impact is usually minimal unless you removed a very large number of files from an app's monitored directories.
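As a rough way to set expectations for storage recovery, the arithmetic is simple: for each set of identical files you keep one copy, so the most you can recover is the file size times the number of extra copies. The Python sketch below works through a made-up example. The sizes and counts are illustrative, and the result is an upper bound because APFS clones may already be sharing blocks.

```python
def reclaimable_bytes(groups):
    """Upper bound on space freed by keeping one copy per duplicate group.

    `groups` is an iterable of (file_size_bytes, number_of_copies) pairs.
    """
    return sum(size * (copies - 1) for size, copies in groups if copies > 1)


# Illustrative numbers: a 2 GB video stored three times, a 50 MB archive
# stored twice, and a 700 KB document that has no duplicate.
example = [(2_000_000_000, 3), (50_000_000, 2), (700_000, 1)]
upper_bound = reclaimable_bytes(example)
print(f"{upper_bound / 1e9:.2f} GB at most")
```

In this example the two extra video copies account for 4 GB and the extra archive for 0.05 GB, giving 4.05 GB at most. It also shows why the largest files are the ones worth reviewing first.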

💡 Realistic Expectations: The primary benefit of removing duplicates is storage space recovery. Performance improvements are typically modest and most noticeable when duplicates were combined with other issues like low storage space. If your Mac was already running well with adequate storage, removing duplicates may not result in noticeable performance changes.

Conclusion

Duplicate files primarily waste storage space, which is the most tangible and measurable impact. The performance impact of duplicates on modern macOS is more nuanced than often claimed. APFS uses file cloning to share content blocks when files are duplicated, Time Machine uses hard links and deduplication in backups, and modern macOS handles large file counts efficiently.

However, duplicates can still contribute to issues in specific scenarios: when combined with very low storage space, when present in extreme numbers (tens or hundreds of thousands), or when they clutter search results and workflow. The primary reasons to manage duplicates are storage space recovery and workflow efficiency (avoiding confusion about which file is the "correct" one).

If you identify that duplicates are consuming significant storage or causing workflow issues, using a dedicated duplicate finder tool can help you manage them effectively. Regular storage maintenance, combined with other practices like storage cleanup and automatic trash management, helps maintain an organized and efficient Mac.

Looking to Manage Duplicate Files?

If you've identified that duplicates are consuming storage space or causing workflow issues, Zero Duplicates can help you find and remove them efficiently. Our app uses content-based detection to find duplicates regardless of filename or location, making it easy to reclaim storage space and organize your files.

Photo by Frames For Your Heart on Unsplash